Relying on No Code Builders: The Appeal and the Pitfalls

The ability to build a website without coding has changed the way people approach web development. No code platforms such as Lovable, Base44, Durable, Mixo, and Framer AI Sites have become the go-to website builders for anyone lacking technical expertise or the patience to learn HTML and CSS. You can launch a site in hours: drag and drop layouts, fill out a few forms for metadata, and the interface tells you everything is complete. The promise is alluring: painless site creation, built-in SEO optimization, instant launch.

Yet, there is a dangerous assumption lurking beneath the surface, one that threatens the visibility and long-term viability of thousands of sites created through no code builders. The belief is that if the platform shows a field for a page title, a meta description, or a sitemap, these elements are properly implemented and discoverable by search engines and AI chatbots. For many, the distinction between what’s shown in the dashboard and what exists on the live site blurs, undermining the purpose of SEO optimization from the outset.

Consider this: a business owner spends an evening launching a new site with a no code platform. The platform claims to support structured SEO, sitemaps, and other SEO and AEO essentials. A sitemap appears in the dashboard, a meta description field is completed, and even AI-driven suggestions pop up. Ownership feels assured; the website is “SEO-friendly.” However, when search visibility fails to materialize, the realization dawns that all this might be a mirage.

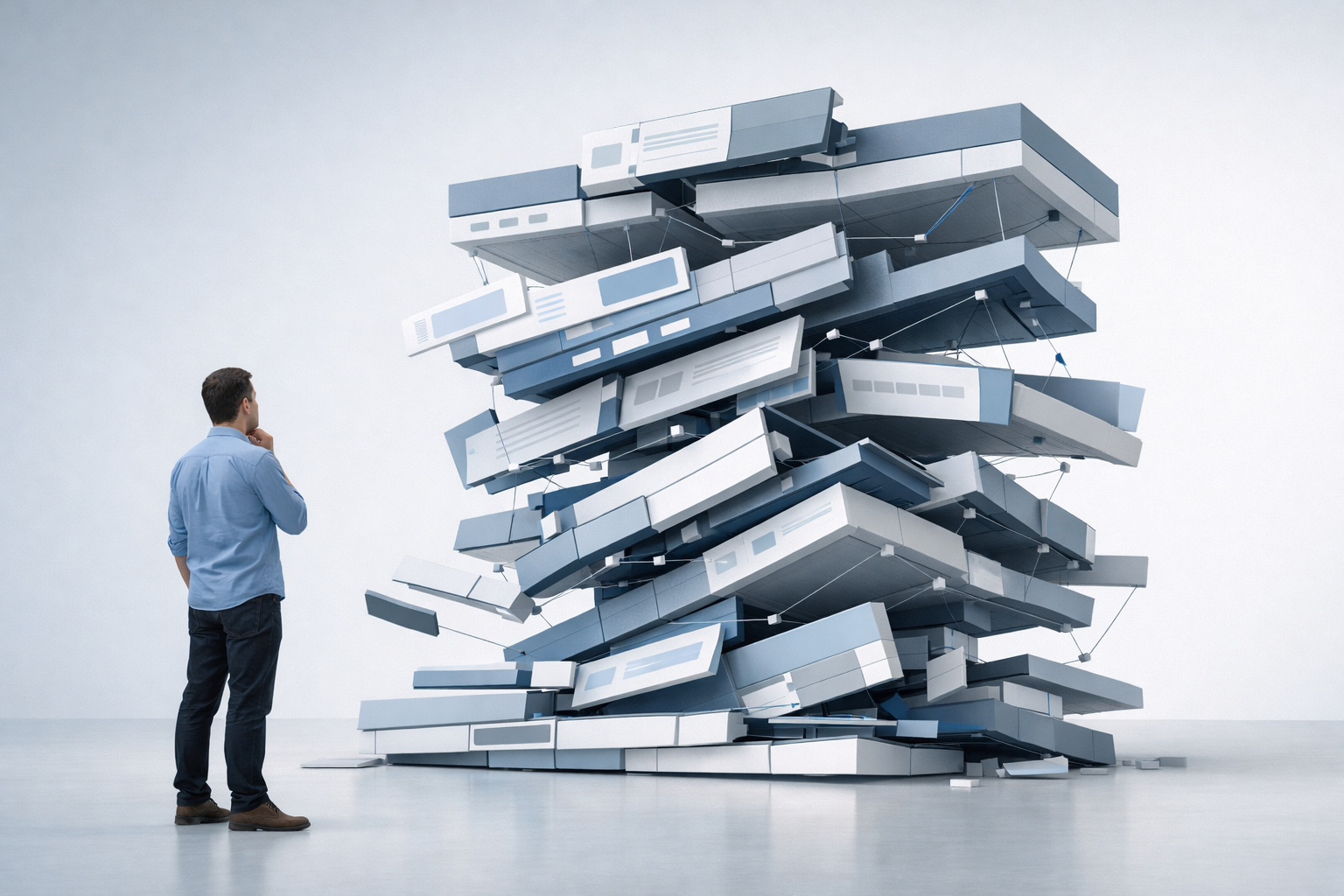

Where does the disconnect lie? The crux is in the architecture of no code systems. Designed for maximum speed and minimal friction, these platforms optimize the creator experience, not the resulting page’s alignment with the demands of search engines or AI-driven content discovery. No code builders make it remarkably easy to build a website without coding, but they rarely go beyond the surface when it comes to the technical requirements for actual online visibility.

Common SEO Traps Hiding in No Code Website Builders

Most users are not aware that the so-called SEO optimization functions of no code builders can be superficial, incomplete, or even broken. These problems rarely show up during the creation process; they become apparent only after the site is live and turns out to be unindexed or missing from search results altogether.

Let’s explore the real-world scenarios that frequently surface for users of no code website builders:

- Phantom Sitemaps: A platform may proudly display a “sitemap generated” message, but visiting /sitemap.xml shows either a 404 error or an outdated file missing recent pages and changes. Google or Bing never discovers the complete site.

- Invisible Metadata: You diligently fill in title tags and meta descriptions in the no code builder’s settings, expecting these snippets to improve your rankings. When analyzing the page’s source, you find these tags are missing, malformed, or overridden by template defaults.

- Structured Data Fiascos: Many creators attempt to inject structured SEO information, like FAQ schema or product structured data, via prompts or widgets. These implementations often fail basic validation or place schema blocks in the wrong spots, rendering them unreadable to search engines.

- Robots.txt Misconfigurations: An editor may show a robots.txt file “ready for Google,” while the published site file blocks crawlers with a Disallow: / directive by default, accidentally telling search engines to ignore your entire site.

- Broken Canonical Tags: A canonical tag in a no code platform frequently points to the platform’s default URL, a generic home page, or duplicates the path incorrectly. This canonical confusion can result in Google skipping indexing of crucial pages, relegating the site to search limbo.

- Unexpected Overwrites: Worryingly, many no code platforms roll out silent updates. These updates overwrite custom SEO settings, erasing carefully crafted meta tags or reverting robots.txt changes back to their insecure defaults.

The above problems illustrate a consistent theme: visual options in dashboards or prompts rarely ensure the live website’s code, structure, and metadata are correct. Building a website without coding creates a credibility gap: a disconnect between what is configured and what actually exists when a bot, crawler, or LLM accesses the site.
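The robots.txt trap in particular is easy to test for outside the dashboard. The sketch below is a minimal illustration, not a full audit: it uses Python's standard `urllib.robotparser` to ask whether a given robots.txt body would stop a crawler from fetching the site root. The function name `blocks_root` and the two sample files are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser


def blocks_root(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if this robots.txt text forbids user_agent from fetching "/"."""
    parser = RobotFileParser()
    # parse() accepts the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


# Hypothetical published file that shuts out every crawler.
blocked_site = "User-agent: *\nDisallow: /\n"
# Hypothetical file that only hides an admin area.
open_site = "User-agent: *\nDisallow: /admin/\n"

print(blocks_root(blocked_site))
print(blocks_root(open_site))
```

Paste in the text actually served at /robots.txt on the live domain, not the version shown in the editor; the point of the check is to compare the two.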

Prompting Your Way to Problems: The Risks of AI-Powered No Code Editing

No code website builders have integrated AI at a dizzying speed. Most now feature ways to prompt the system to “improve SEO” or “generate meta tags.” While this appears as a leap forward, the results are unpredictable and occasionally disastrous.

When you prompt a no code platform for metadata changes or SEO optimization, several painful side effects may occur:

- Invalid Code Output: Some AI-powered no code systems generate malformed meta tags, resulting in duplicate titles, orphaned descriptions, or code that never gets injected into the live site’s header.

- Overlapping Settings: Updating a site’s meta description via prompt often overwrites HTML headers, causing global navigation menus to break or vanish entirely.

- Collateral Damage: Changing robots.txt in the AI interface can inadvertently alter JavaScript variables necessary for loading widgets or analytics. Website functions break for no apparent reason.

- Feedback Loop Failures: Adding structured SEO details sometimes resets unrelated parts of the settings, forcing previous manual configurations to vanish. This cyclical regression frustrates both creators and search professionals.

- Unintended Accessibility Nightmares: As new visual features launch through an AI prompt, aspects like alt text or ARIA labels often disappear, reducing accessibility compliance and eroding SEO and AEO intent.

These compounding issues surface because prompts ask for specific changes in a way that the underlying codebase was not architected to handle flexibly. Unlike a developer, who hand-writes logic to incorporate SEO best practices into the site’s theme or framework, AI-driven interfaces attempt on-the-fly adjustments without considering dependencies. The result: a cascade of bugs, regressions, and vanishing elements, all from trying to optimize.

The speed with which AI-enhanced no code platforms introduce these opportunities makes it tempting to believe in their SEO claims. Yet, at every step, prompting exposes the core weakness of such systems: the absence of layered, persistent, and tested logic for search visibility.

Real SEO, AEO, and AI Discoverability: The Missing Links in No Code

To truly achieve online visibility, a website must communicate with both search engines and AI chatbots using signals and structure that these systems trust. This task is where no code platforms routinely fall short, regardless of how easy they make it to build a website without coding.

Consider what Google, Bing, and ChatGPT need to index, surface, and highlight web content:

- Accurate, Readable Metadata: Titles, descriptions, canonical links, and open graph tags must appear cleanly in the page source, not just in a dashboard field.

- Crawlable Sitemaps and Robots Files: Search engines and AI chat assistants navigate a site via robust sitemaps and clear robots.txt instructions. These must actually exist and be up to date.

- Structured Data Markup: FAQ, HowTo, Review, and Product schemas need to reach a high standard of validity, with logical placement in the code, for rich snippets and AI overviews.

- Clear Search Intent and Conversational Cues: Search Generative Experience (SGE) engines and GPT-based chatbots pull from conversational cues, questions, and topical clusters embedded in page metadata.

- AEO Compatibility: Answer Engine Optimization (AEO) demands a site delivers direct, answer-ready data that is both machine-readable and credible by AI standards.

- Support for llms.txt: With generative AI shaping “how search works,” a live and accurate llms.txt file (a proposed convention rather than a formal standard) gives large language models a curated, machine-readable guide to the content you want them to process.

Most no code platforms lack the robust backend needed to ensure structured SEO or authoritative signals for AI and search. Instead, they prioritize getting a site live fast, great for testing an MVP, not for sustained business visibility.
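To see whether the metadata items in the list above really reach the page source, you can parse the published HTML yourself. The following is a rough sketch using only Python's standard `html.parser`; the class name `HeadSignals`, the helper `read_head_signals`, and the sample page are illustrative assumptions, and a real audit would feed in the HTML downloaded from the live URL.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect the title, meta description, canonical links and Open Graph tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.canonicals = []
        self.open_graph = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.description = a.get("content")
        elif tag == "meta" and (a.get("property") or "").startswith("og:"):
            self.open_graph[a["property"]] = a.get("content")
        elif tag == "link" and (a.get("rel") or "").lower() == "canonical":
            self.canonicals.append(a.get("href"))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def read_head_signals(html: str) -> HeadSignals:
    """Parse a page and return the collected signals."""
    parser = HeadSignals()
    parser.feed(html)
    parser.close()
    return parser


# Hypothetical page source, standing in for what "view source" would show.
sample = """<html><head>
<title>Acme Plumbing</title>
<meta name="description" content="Emergency plumbing in Leeds.">
<link rel="canonical" href="https://example.com/">
<meta property="og:title" content="Acme Plumbing">
</head><body></body></html>"""

signals = read_head_signals(sample)
print(signals.title, signals.description, signals.canonicals, signals.open_graph)
```

A `description` of `None`, an empty `canonicals` list, or a list with more than one entry are each worth investigating, since they mean the dashboard value either never reached the markup or reached it more than once.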

According to Search Engine Journal’s 2026 trends report, over 67% of sites launched via low code and no code platforms experienced indexing delays or complete invisibility in Google’s index, mainly due to improperly formed metadata, missing sitemaps, or faulty robots directives. Similarly, Ahrefs’ latest technical SEO guidelines state that while no code website builders claim “instant SEO,” they tend to help only at a surface level; advanced logic, structured SEO, and conversational optimization require direct code access or highly specialized solutions.

The rise of conversational search and AI-driven content discovery further exposes the gulf between what these platforms promise and what they deliver for lasting site performance.

Case Studies: Where No Code SEO Fails (And a Few Lessons from the Field)

To understand the day-to-day realities, it helps to look at real-life business examples using no code platforms for web presence. Here’s what typically happens:

Scenario One: The Vanishing Startup

A founder uses Durable, a no code platform, to quickly launch a landing page and a handful of service pages. The builder allows for quick edits and drag-and-drop section reordering. The startup spends time prompting for SEO, filling out “Google snippet” fields, and generating an “About” structured schema.

A month after launch, the site fails to rank for brand queries. On inspection:

- The sitemap URL returns a 404 error.

- The site’s meta description in the dashboard is not present in the HTML source.

- The robots.txt file blocks all crawler access.

- Google Search Console shows zero impressions.

Result: The website exists but can’t be discovered by search engines. Lesson learned: surface-level SEO fields mean nothing if not correctly deployed site-wide and kept intact through platform changes.
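A phantom or stale sitemap like the one in this scenario can be caught by comparing the published file against the pages you expect to exist. Here is a small sketch using Python's standard `xml.etree.ElementTree`; the helper names, the sample XML, and the example.com URLs are placeholders invented for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol for <urlset>, <url> and <loc>.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text: str) -> set[str]:
    """Return every <loc> value found in a sitemap document."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text}


def missing_from_sitemap(xml_text: str, expected: set[str]) -> set[str]:
    """Return the expected page URLs that the sitemap does not list."""
    return expected - sitemap_urls(xml_text)


# Hypothetical sitemap body, as it might be served at /sitemap.xml.
sample = (
    '<?xml version="1.0" encoding="UTF-8"?>'
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/services</loc></url>"
    "</urlset>"
)
expected_pages = {
    "https://example.com/",
    "https://example.com/services",
    "https://example.com/pricing",
}

print(missing_from_sitemap(sample, expected_pages))
```

If the live URL returns an HTML error page instead of XML, `ET.fromstring` will most likely raise a `ParseError`, which is itself a useful signal that the sitemap shown in the dashboard was never published.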

Scenario Two: The Agency Black Box

A digital marketing agency uses a no code solution (Mixo) to deliver quick-turnaround sites for multiple small business clients. The agency manager assumes the platform’s structured SEO block works across all client projects.

On deeper review:

- Internal links generated for siloing disappear after a prompt-based update.

- Schema for product listings fails validation due to improper JSON-LD tags.

- One website’s llms.txt file is completely absent.

After complaints of “not being found,” the agency invests hours using third-party audits to discover and fix gaps. The fix? Manually exporting sites and adding real code-based SEO, negating the “no code” promise.
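Schema failures of the kind the agency found often start with JSON-LD that is not even valid JSON. The sketch below, built on Python's standard `html.parser` and `json` modules, pulls every `application/ld+json` block out of a page and reports whether it parses. It checks syntax only and says nothing about whether the properties satisfy schema.org or Google's rich result requirements; the names `JsonLdCollector` and `check_json_ld` and the sample markup are assumptions for this example.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Gather the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._buffer = None

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.blocks.append("".join(self._buffer))
            self._buffer = None


def check_json_ld(html: str) -> list[tuple[bool, str]]:
    """Return (parsed_ok, detail) for each JSON-LD block in the page."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    results = []
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            results.append((False, f"invalid JSON: {exc.msg}"))
            continue
        kind = data.get("@type", "missing @type") if isinstance(data, dict) else "list"
        results.append((True, str(kind)))
    return results


# Hypothetical page: one well-formed Product block, one broken FAQ block.
page = """
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Product", "name": "Widget"}</script>
<script type="application/ld+json">{"@type": "FAQPage", "mainEntity": [}</script>
"""

for ok, detail in check_json_ld(page):
    print(ok, detail)
```

Blocks that pass this check should still go through a dedicated structured data validator before you rely on them.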

Scenario Three: The AI-First Content Creator

A professional creator leverages Framer AI Sites for a series of long-form blog articles, relying heavily on AI-generated FAQs and topical clusters. On reviewing Google’s view of the content:

- Most “meta questions” appear in the dashboard, but none are rendered in actual page markup.

- Multiple canonical tags point to inconsistent URLs, causing duplicate content flags.

- Adding new AI features breaks currently live internal linking structures.

The net effect is that AI chat systems ignore the content, and organic search fails to recognize page authority. Structured SEO was missing at every step, even though it appeared otherwise in the platform’s UI.

In all these scenarios, the convenience of no code comes at substantial risk. Users aiming to build a website without coding find themselves spending more energy on patching SEO issues after launch than they would have spent hard-coding best practices from the start.

Building for Visibility: What Works and What to Avoid in a No Code World

The allure of building a website without coding remains undeniable for time-strapped founders, entrepreneurs, and agencies managing dozens of quick-launch sites. But the quest for SEO optimization and AI-ready discoverability demands a more critical approach.

Here’s a quick breakdown for creators, agencies, and business owners:

What Rarely Works:

- Trusting dashboard prompts or AI-generated “SEO” to establish lasting visibility.

- Expecting correct implementation of metadata just because the UI supports inputs.

- Relying solely on AI for structured SEO or schema markup.

- Assuming robots.txt, canonical tags, and sitemaps are properly handled without independent verification.

What Sometimes Helps:

- Using the “export code” or “advanced editor” options, if available, for adding or fixing technical SEO specifics after the initial build.

- Installing third-party SEO extensions (when supported), but keeping in mind that update rollouts can break these enhancements unexpectedly.

- Manual audits through Google Search Console, Ahrefs, or even using “view page source” to verify what code actually exists live.
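The manual checks in this list can be partly scripted. As a rough sketch, assuming the site serves its robots file and sitemap at the conventional root paths, the helpers below build the URLs worth requesting and report the HTTP status each one returns, using only Python's standard `urllib`. The function names and the `seo-audit-sketch/0.1` user agent string are made up for illustration.

```python
from urllib.error import HTTPError, URLError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


def audit_targets(site: str) -> dict[str, str]:
    """Return the URLs to check for a site: the page itself plus root-level files."""
    return {
        "home": site,
        # A leading slash makes urljoin resolve against the domain root.
        "robots": urljoin(site, "/robots.txt"),
        "sitemap": urljoin(site, "/sitemap.xml"),
    }


def fetch_status(url, timeout=10.0):
    """Return the HTTP status code for url, or None if no response arrived."""
    request = Request(url, headers={"User-Agent": "seo-audit-sketch/0.1"})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as err:
        # 4xx and 5xx responses still carry a status code worth reporting.
        return err.code
    except URLError:
        return None


print(audit_targets("https://example.com"))
```

Calling `fetch_status` on each value of `audit_targets("https://your-domain.example")` gives a quick first pass: a 404 for the sitemap or robots entry means the file announced in the dashboard is not actually published.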

What Consistently Works:

- Hybrid approaches: Building rapid layouts with a no code builder, then enlisting an SEO specialist or a system with direct code access (such as automated SEO SaaS platforms) to secure the deeper layers of visibility.

- Regularly checking for correct metadata, sitemaps, robots directives, canonical implementation, and schema, then monitoring these post-update.

- Maintaining backup settings and documentation for every change prompted within the platform.
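Monitoring after updates becomes much easier if the signals you care about are stored as a snapshot and compared over time. The sketch below shows one possible shape for that comparison in plain Python; the snapshot keys (`title`, `description`, `canonical`, `robots_blocks_root`) and their values are hypothetical and would come from whatever checks you run against the live site.

```python
import json


def diff_signals(before: dict, after: dict) -> dict:
    """Return every signal whose value changed, as {name: (old, new)}."""
    changed = {}
    for key in sorted(before.keys() | after.keys()):
        old, new = before.get(key), after.get(key)
        if old != new:
            changed[key] = (old, new)
    return changed


# Hypothetical snapshot recorded right after launch.
baseline = {
    "title": "Acme Plumbing",
    "description": "Emergency plumbing in Leeds.",
    "canonical": "https://example.com/",
    "robots_blocks_root": False,
}
# Hypothetical snapshot taken after a platform update.
after_update = {
    "title": "Acme Plumbing",
    "description": None,
    "canonical": "https://example.com/",
    "robots_blocks_root": True,
}

regressions = diff_signals(baseline, after_update)
print(json.dumps(regressions, indent=2))
```

Storing each snapshot as a dated JSON file alongside your change notes gives you both the backup and the documentation trail, and an empty diff after an update is the evidence that nothing was silently overwritten.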

Business Owners and Agencies Need to Prioritize:

- SEO and AEO Stability: Steer away from platforms that can’t guarantee metadata, robots, and structured data are present as code, not just in a visually pleasing dashboard.

- Proof of Indexing: Check that all pages are indexed by Google and Bing, and that they appear in tools like Google Search Console and Bing Webmaster Tools.

- Consistency Across Updates: After every template or feature update, verify that previous SEO configurations remain intact.

- Transparent Control: Favor platforms (or paired systems) providing direct access to the code or at least advanced APIs for technical configuration.

By focusing on these approaches, creators shift away from the seductive but unreliable promise of pure no code SEO prompts and embrace solutions fit for the realities of a competitive digital marketplace.

FAQ: No Code Website Builders, SEO Disasters, and What You Can Do

Why isn’t my no code site showing up in search results even though I filled in the SEO settings?

Even though you might have entered metadata or clicked on SEO optimization fields, no code builders often don’t write these elements into your site’s actual source code. The visible settings in dashboards rarely guarantee the live site honors them, meaning Google and other search engines can’t detect or trust your site, which leads to de-indexing or invisibility.

What is the difference between SEO fields in a dashboard and real SEO?

SEO fields in no code dashboards are just form inputs. Real SEO requires those values to appear as correctly rendered HTML meta tags, structured data schemas, working sitemaps, and robots files served at the expected URLs in a valid format. Without this technical layer, your SEO isn’t truly “on the web.”

Can AI prompts fix SEO problems on a no code site?

While AI prompts may generate content that sounds SEO-friendly, they often lack the ability to implement the persistent, code-level changes necessary for search engines or AI bots to discover your site. These fixes may briefly surface in a dashboard, but updates can erase them or create new issues elsewhere.

How can I check whether my SEO elements are actually live?

You can use your browser’s “view source” to see whether meta tags and structured data markup appear as expected, and visit /robots.txt and /sitemap.xml to validate their existence and accuracy. Tools like Google Search Console and Bing Webmaster Tools also show which of your pages are indexed and reveal missing metadata.

How do I keep the speed of no code without sacrificing visibility?

Leverage no code platforms for fast development, but turn to specialized, automated SEO tools or experienced professionals who can audit, repair, and persistently implement all required metadata, structured data, and indexing signals. Consider solutions that bridge your no code builder with automated technical SEO systems for reliable, long-term site health.