Understanding the Google num=100 Update and Its Major Ripple Effect on SERP Tracking

When Google quietly removed support for the num=100 parameter in September 2025, the search ecosystem experienced an immediate, industry-wide shift. The change may sound purely technical, but by capping standard Search Engine Results Page (SERP) queries at 10 results per page, it sent shockwaves through SEO agencies, website operators, and reporting platforms. Historically, this single parameter let SEO tools and agencies pull up to 100 results in one request, so they could see where a site ranked across the first 100 positions for a given search. Overnight, that depth of data disappeared.

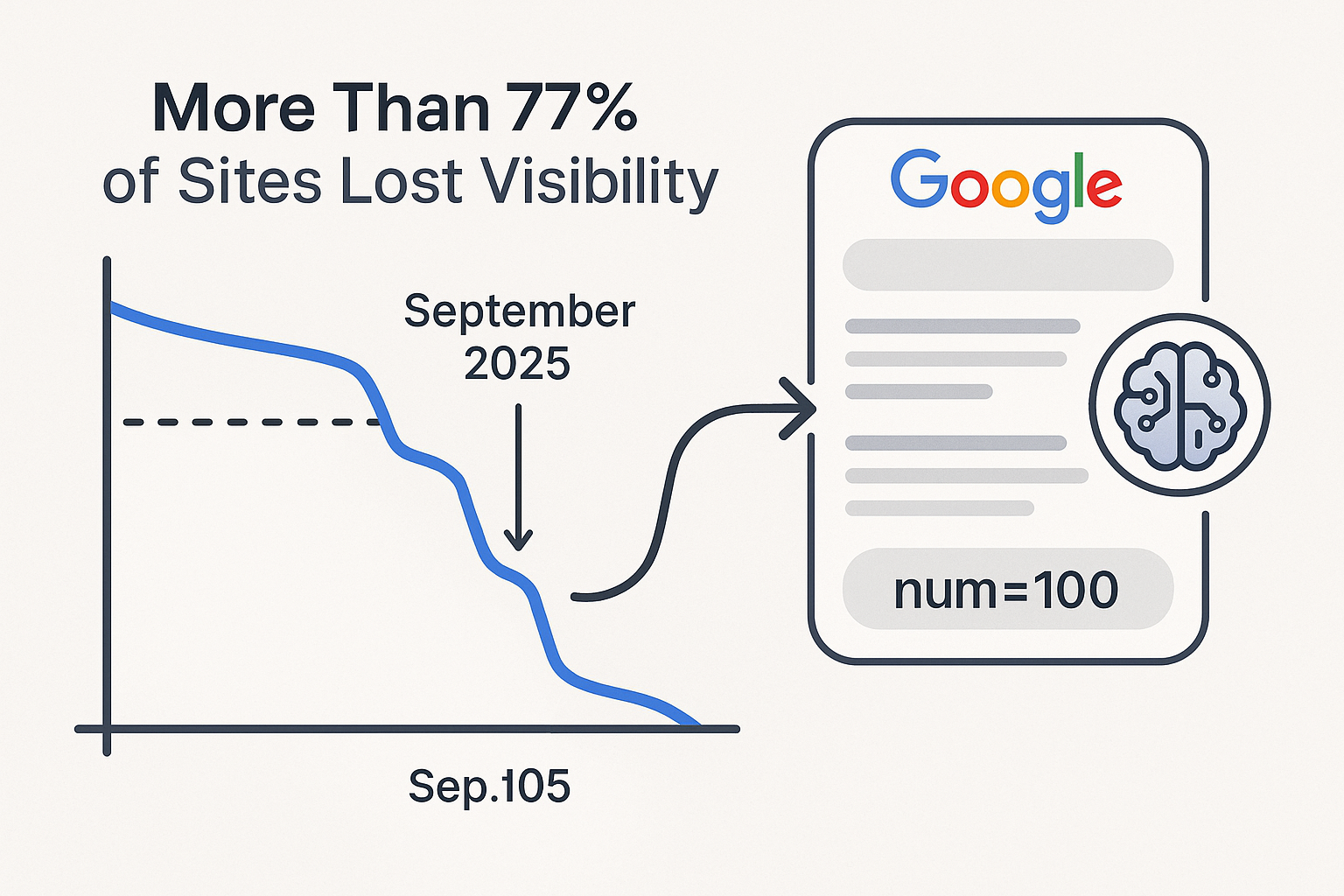

Suddenly, 77.6% of tracked sites lost unique keyword visibility, and 87.7% experienced steep drops in Search Console impressions. Such drastic numbers were highlighted by trusted industry outlets like Search Engine Land and reinforced by mainstream coverage on MSN. For agencies, businesses, and in-house SEOs, the Google num=100 update delivered not just a technical change, but a new paradigm for how visibility data is collected, interpreted, and reported.

This new landscape creates fresh challenges, opportunities, and an urgent need to rethink SEO strategy, operational tactics, and client communications. This article explores the technical reasons behind the update, its real-world impact, adaptation strategies, and the rising importance of AI-first SEO in an era of limited SERP tracking.

How the num=100 Parameter Shaped SEO, and Why Its Removal Matters

In the years leading up to Google’s rollout of this policy change, the num=100 parameter held a critical, if often background, role in SEO data collection. When appended to a Google search URL, num=100 signaled to Google’s servers to display or deliver up to 100 organic results per page, rather than the default 10. For hands-on SEO professionals, agencies, and software providers, this feature allowed comprehensive rank tracking, deep search analytics, and large-scale site audits to happen efficiently, and at scale.
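To make the mechanics concrete, here is a minimal sketch of how such a URL was assembled. The helper name `build_search_url` and the sample query are illustrative assumptions, and since the September 2025 change the `num` value no longer yields more than the default page of results.

```python
from urllib.parse import urlencode


def build_search_url(query, num=None):
    """Build a Google search URL; `num` is the legacy results-per-page hint."""
    params = {"q": query}
    if num is not None:
        # Historically, num=100 asked for up to 100 results on one page.
        params["num"] = num
    return "https://www.google.com/search?" + urlencode(params)


default_url = build_search_url("running shoes")
legacy_url = build_search_url("running shoes", num=100)

print(default_url)
print(legacy_url)
```

The two URLs differ only in the trailing `num=100` pair, which is why a single appended parameter could change the economics of rank tracking so much.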

Many of the largest SEO tools and platforms integrated the ability to fetch this expanded data, thus showing users where their domain ranked across the top 100 (sometimes more) keyword positions. Monthly and year-over-year reporting, competitive benchmarking, and strategies for climbing past page one all hinged on this access. The parameter also allowed for tracking long-tail visibility, capturing smaller yet valuable traffic opportunities well beyond the coveted top 10.

With the September 2025 removal, Google defaulted all standard result pages to show a maximum of 10 blue links. Tools relying on a single query to surface deep rankings immediately became less useful for monitoring anything past page one. Data pipelines that previously delivered full-funnel keyword tracking could now only reflect the visible, high-volume tier, blurring the nuances between competitive jockeying and real searcher experience. The introduction of this Google SERP data limit created an immediate technical barrier for platforms and agencies who, until that week, could efficiently collect and analyze rankings across broad sets of terms.

From an engineering perspective, Google’s move makes sense. By restricting high-frequency programmatic scraping, it reduces infrastructure load and aligns output more closely with what real users see in practice. There’s also speculation that Google’s strategic reasoning is tied to rolling out more AI-driven results, as the company pivots toward Search Generative Experience (SGE), AI Overviews, and conversational interfaces, competing directly with LLM search tools like ChatGPT and Perplexity.

The secondary effect? Historic data is now out of sync with new reporting. A site that previously tracked hundreds of distinct keywords per month may show sudden and dramatic gaps in visibility, regardless of actual performance or underlying ranking strength. This disconnect between pre- and post-update data introduces confusion, challenges long-term trend analysis, and changes how results must be interpreted moving forward.

Real-World Impacts: Data Loss, Confusion, and the New Reporting Challenge

When a global search engine alters how ranking data is served, the dominoes fall quickly. The Google num=100 update forced immediate, tangible trade-offs for how SEO data is collected and reported, impacting everyone from enterprise analysts to SMB business owners and SEO tool providers. There are several key dimensions to this shift.

First, analytics platforms and SERP monitoring tools that previously extracted deep ranking data through the num=100 parameter must now crawl one results page at a time, each yielding only 10 results. This does not just decrease efficiency; it fundamentally changes what can be tracked, how often, and at what cost. For large-scale rank tracking across hundreds or thousands of keywords, crawl budgets and platform scaling become far less economical. Tools that continue extended tracking must fetch and render each results page sequentially, so covering the top 100 takes roughly ten requests where one used to suffice, and server and bandwidth requirements rise in proportion.
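As a back-of-the-envelope check on that added cost, the sketch below counts the page requests needed to cover a given ranking depth. The function name and the figure of 1,000 keywords are assumptions chosen for illustration, and real crawlers add retries, rendering, and proxy overhead on top.

```python
import math


def requests_needed(keywords, depth, results_per_page):
    """Page requests required to cover `depth` positions for every keyword."""
    pages_per_keyword = math.ceil(depth / results_per_page)
    return keywords * pages_per_keyword


# Hypothetical workload: 1,000 keywords tracked to position 100.
before = requests_needed(keywords=1000, depth=100, results_per_page=100)
after = requests_needed(keywords=1000, depth=100, results_per_page=10)

print(before, after, after // before)
```

Under these assumptions the same tracking depth needs ten times as many requests, which is the multiplier vendors have to absorb or pass on through reduced depth or frequency.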

Second, the change distorts year-over-year (YoY) comparisons, monthly reviews, and historical visibility baselines. Many agencies and brands immediately noticed “visibility loss” in the form of reduced keyword counts, search impressions, and tracked positions in Google Search Console. A site that once measured presence across 450 keywords in the top 100 might suddenly appear (in dashboards and reports) to only cover 45, all in the top 10. While the visibility of the best ranking terms remains, deeper insights into niche, upcoming, and long-tail performance become much harder to access.

For agencies and internal marketing teams, the reporting headache has been acute. Clients, stakeholders, and executives may see sudden traffic or impression drops and suspect performance failures. Disconnected reporting, broken comparisons, and unexplained dips in key performance indicators (KPIs) all create pressure for new education and reframing, especially for businesses with executive oversight or board reporting cycles tied to quantifiable progress.

Simultaneously, benchmark visibility with competitors becomes harder. Since all ecosystem actors are now limited to data for the top 10, the ability to meaningfully compare extended keyword sets, assess market share in deep results, or spot emerging players beyond the first page is sharply curtailed. For those in highly competitive industries where top-20 and top-30 rankings signal momentum, this creates an additional layer of opacity.

It is important to note that all SEO technology platforms have had to reevaluate their data models, including SaaS solutions like NytroSEO. While NytroSEO’s Google-specific tracking is now confined to first-page (top 10) results, extended visibility for Bing and Yahoo remains unaffected for now. Users of all major SEO tools should expect further system adaptations as software providers adjust crawling methods and processing policies in response to these industry-wide restrictions.

NytroSEO users should be aware that although pricing plans, including any lifetime deals, currently remain unchanged, there may be future adjustments to crawling frequency, daily data collection quotas, or system run limits. These measures are necessary to absorb increased Google-related crawl costs and ensure long-term sustainability, all within existing plan terms and the platform’s EULA and Terms of Use. This shift is entirely outside the control of SEO technology vendors, being driven solely by Google’s infrastructure and policy decisions. NytroSEO has issued communication outlining its continued commitment to rapid adaptation and advanced AI automation. However, customers may experience some evolving limits on report depth and frequency as the ecosystem stabilizes.

For a sector built around rich data, granular analysis, and multi-touch attribution, the Google num=100 update fundamentally reordered how performance can be measured and what success looks like. It marks a turn toward greater focus on top-10 excellence, while reducing visibility into lower, yet still valuable, search positions.

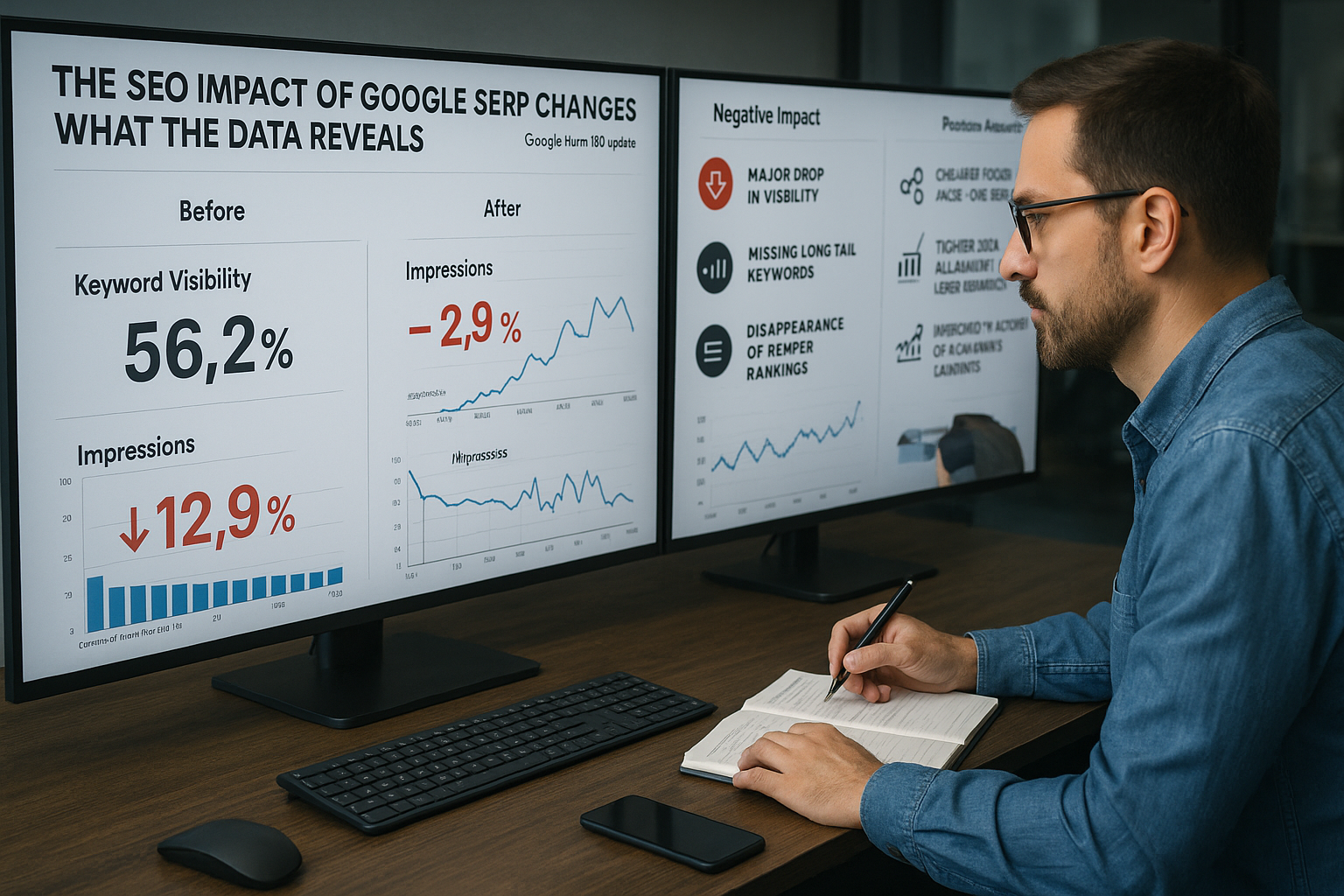

The SEO Impact of Google SERP Changes: What the Data Reveals

Examining the core SEO impact of Google SERP changes introduced by the num=100 update reveals both immediate and long-term transformations. According to cross-industry data and research cited by Search Engine Land in 2025, over three-quarters of sites tracked suffered losses in keyword visibility. Google Search Console echoed these findings with close to 88% of sites showing a drop in total impressions. These numbers are supported by MSN’s technical breakdown and agency reports, which detail the scale and granularity of the disruption.

One of the most visible effects is the drop in keyword visibility that Google’s new default produces. Businesses and agencies accustomed to finding their domains somewhere in the first 100 positions, often used for monitoring progress as keywords climb toward the coveted first page, find those “up-and-coming” terms expunged from data tables. This undermines longstanding strategies built around steady incremental improvement, as only page-1 rankings remain visible for Google.

This change also upends the architecture of the SEO tools it affects. Many relied on a single, efficient query to aggregate a full spectrum of keyword positions. Now that the default is restricted to 10 results, tools face a binary choice: adapt to a far more fragmented crawl pattern or lose depth in monitoring. For some, this presents an existential challenge, especially for those selling rank tracking or performance analytics at scale. Budgetary and infrastructure implications are also significant, especially for SaaS SEO platforms and agencies managing high volumes of clients with complex reporting needs.

Impression counts, as recorded in Google Search Console, have dropped commensurately. Search Console records an impression only when a result is actually served on a loaded results page. With 100-result pages gone, results in deeper positions are served far less often, including, by most industry accounts, to the automated rank trackers that used to request them, so fewer impressions accumulate in aggregate visibility metrics. This drop in Google Search Console impressions, widely discussed in industry forums and guides, demands thoughtful education for clients and decision-makers. The impression loss does not, in itself, signal a performance collapse, just a recalibration of what data Google is willing to display and for which queries.

Rank position data has also become more “real” in that it closely reflects positions that matter to users. Many experts see upside here: the inflation of keyword counts through num=100 overrepresented minor placements that never drew actual clicks. By centering attention on positions that account for nearly all the organic search traffic, the changes provide tighter alignment with user experience.

Still, this comes at the cost of insight. SEO practitioners will find it harder to spot emerging trends at depth or quickly respond to minor adjustments on the second or third results page. Agencies and businesses that used to track upward momentum, watching as content moved from rank 93 to 52 to 21 before breaching page one, will have to get creative, relying more on partial data, external engines, or advanced queries to reconstruct the bigger picture.

The impact on reporting is also profound. With SERP ranking data limitations now in place, SEO vendors must reframe statements about progress and clarify that post-update dips do not reflect actual ranking deteriorations (unless keywords actually fall out of the top 10). Meanwhile, companies optimized around hitting top-of-first-page positions are now best positioned to reap visibility benefits and capitalize on persistent traffic.

From Data Limits to Dominance: SEO Strategies to Adapt to the 10-Result SERP in 2026

SEO in a post-num=100 world is a navigation of new priorities, revised workflows, and more strategic use of both data and automation. Facing Google SERP data limits requires more than a patch; it calls for a rethinking of how visibility is measured, content is created, and client progress is communicated.

Refine Data Collection and Tracking Workflows

Because major SEO tools lost direct access to broader Google ranking data, the first step is retooling data pipelines. Leading solutions now focus on collecting the clearest, highest-value rankings, the actual top 10 per keyword, and structuring all analysis to reflect this. For agencies and businesses with a strong internal reporting culture, this means updating expectations, dashboards, documentation, and data pipelines to show a more condensed, but accurate, picture of performance.

At the operational level, tracking as much as possible from other engines, where legacy depth is still supported, provides helpful context. Microsoft Bing and Yahoo, notably, continue (as of late 2025) to support tracking up to the top 100 positions per search. Persistent vendors maintain SERP performance tracking for these alternative engines, giving agencies a slice of competitive intelligence and extended trend insight unattainable through Google alone.

Prioritize Top-10 Excellence and Authority

The new emphasis on the top 10 results pushes SEO best practices toward champion-level quality, relevance, and authority. With no direct way to see extended positions in standard Google reporting, page-one optimization becomes the only visible metric that moves the needle.

For content teams, this means sharpening every asset for both user intent and search engine expectations. Deep topical clusters, strategic internal linking, advanced metadata, and E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) become, now more than ever, critical indicators for ranking. Automated solutions that dynamically optimize code, inject schema, and realign metadata at scale provide the necessary edge when only prime positions matter.

As traditional “climb the long tail” strategies lose supporting data, SEOs must double down on research, targeting, and refinement to push more pages into the page-1 cohort. This is where nimble automation can pay huge dividends, enabling fast adaptation to algorithm updates and SERP volatility.

Reframe Reporting and Client Communication

For agencies and consultants, the Google keyword visibility drop is already a hot topic in external communications. It is vital to preemptively clarify to clients and internal stakeholders that newly visible “drops” in reporting reflect how Google serves data, not an inherent decline in ranking strength or campaign progress. Agencies now use visual aids and explainer sessions to contextualize apparent drops, sometimes mapping historic data against new trends for transparency.

Updated reporting must clearly distinguish between actual ranking loss (keywords dropping off page one) and the artificial, parameter-driven data loss caused by the update. Where possible, integrations with Bing and Yahoo facilitate auxiliary trend discussions, showing where progress continues even if Google limits visibility.
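One way to draw that distinction in a report is to compare each keyword’s last pre-update position with its post-update status. The sketch below is a simplified illustration, not any vendor’s method: the function name, labels, and sample keywords are all hypothetical, and it assumes post-update Google data contains only positions 1 to 10.

```python
PAGE_ONE = 10  # deepest Google position still tracked after the update


def classify_keyword(before, after):
    """Label a keyword from its position before and after the update.

    `before` is 1..100 or None (never tracked); `after` is 1..10 or None,
    because positions beyond page one are no longer collected.
    """
    was_on_page_one = before is not None and before <= PAGE_ONE
    if after is not None:
        return "held page one" if was_on_page_one else "reached page one"
    if was_on_page_one:
        return "real loss: dropped off page one"
    return "data gap: position no longer tracked"


# Made-up sample data: keyword -> (position before, position after).
snapshots = {
    "trail running shoes": (4, 6),
    "waterproof running shoes": (8, None),
    "running shoes for flat feet": (37, None),
    "best running socks": (14, 9),
}

report = {kw: classify_keyword(b, a) for kw, (b, a) in snapshots.items()}
for keyword, label in report.items():
    print(f"{keyword}: {label}")
```

Only the second keyword represents a genuine ranking loss; the third simply fell outside what can be measured, which is the case clients most often misread as a decline.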

Invest in AI-Driven Optimization and Automation

As SERP ranking data limitations retire some parts of the SEO workflow, advanced AI-driven automation becomes a distinguishing asset. Platforms like NytroSEO leverage machine learning to optimize every layer of site code: not just titles and metadata, but also structured data and conversational Q&A markup, all aligned with modern SERP standards.

These platforms also interpret “signal” beyond rankings, analyzing user intent, topical gaps, and semantic relationships even as Google narrows what it shares. Automated schema, FAQ markup, and page-level context analysis continue to improve click-through rates and discoverability, independent of raw position counts.
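For readers unfamiliar with FAQ markup, the following sketch generates schema.org `FAQPage` structured data as JSON-LD. It is a generic illustration and makes no claim about how NytroSEO or any other platform produces its markup; the function name and the sample question are assumptions.

```python
import json


def faq_jsonld(pairs):
    """Return a schema.org FAQPage JSON-LD string for (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld(
    [
        (
            "What did the num=100 parameter do?",
            "It requested up to 100 results per page instead of the default 10.",
        )
    ]
)

# The JSON-LD is embedded in the page inside a script tag of this type.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```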

Monitor Bing and Yahoo for Extended Insights

As of Q4 2025, Bing and Yahoo SERP depth still conforms to pre-update norms, allowing for deeper, broader monitoring and reporting. Agencies and site owners can use these engines as proxy trend indicators, surfacing emerging keywords, content gaps, and technical issues that may be invisible through Google but still matter to overall web visibility.

While Google’s search market share far outpaces competitors, these alternative sources offer a strategic window for experimentation, hypothesis testing, and niche authority building. For multinational brands, Bing’s international footprint brings added value, and for some sectors, Yahoo continues to drive meaningful search volume.

For advanced reporting, mapping Bing and Yahoo extended data into visual dashboards, alongside top-10-only Google results, helps bridge historic comparisons and keep stakeholders apprised of ongoing progress, even with the updated Google ecosystem factored in.

The AI SEO Revolution: Making the Most of Limited SERP Data

The abrupt shift in available ranking data accelerates the trend toward intelligent, AI-driven SEO. As manual monitoring of hundreds of keyword positions becomes less feasible, digital marketers increasingly rely on automation, machine learning, and real-time optimization systems to maintain (and expand) their search footprint.

Interpreting Sparse SERP Signals

In a world where direct feedback from Google is now limited to the first 10 results per keyword, interpreting subtle ranking movements, content decay, or competitive threats requires a new breed of analytical models. Platforms leveraging natural language processing and predictive analytics scan not just the visible SERP but also user intent, related queries, and topical pathways, surfacing real opportunities and threats even amid SERP ranking data limitations.

AI-first platforms like NytroSEO go beyond historic rank tracking, using advanced algorithms to draw insights from the content itself as well as from parallel search ecosystems. Their automated engines update meta tags, generate new internal links to shore up topical authority, and apply advanced schema markup, keeping sites algorithm-ready as Google pivots toward AI Overviews and conversational discovery.

Automating Optimization at Scale

With the old manual model of spot-checking deep rankings now obsolete, automation platforms have become instrumental in ensuring sites retain their competitive edge. Machine learning models deployed by leading solutions, including NytroSEO, automatically trigger code, metadata, and structure updates as soon as algorithmic shifts are detected. This dramatically reduces lag in response to updates and ensures only top-quality, AI-relevant content earns and maintains the prime placements now rewarded by Google’s published data.

Such SEO automation platforms also help counteract rising crawl costs imposed by Google’s shift, since platform-driven optimization focuses resources on pages that have immediate ranking impact, rather than distributing effort across hundreds of low-visibility terms.

Rethinking Visibility: Measuring Success in a Top-10 World

SEO teams are learning to adjust their KPIs to reflect the new reality, where presence in Google’s top 10 is not just valuable but the only directly measurable evidence of ranking success. Agencies are rethinking reporting dashboards, integrating more behavior-based signals (clicks, conversions, dwell time) and comparative data from Bing and Yahoo. They also pay fresh attention to Google Search Console’s new patterns, treating the drop in Search Console impressions as a data artifact rather than a performance verdict.

Meanwhile, AI-powered solutions continue to optimize for related outcomes: surfacing high-potential clusters, integrating LLM-generated content snippets, and preparing sites for the emerging realities of AI-powered, conversational, and multi-modal search. The future belongs to those who adapt early, applying both technical and communicative prowess to this new visibility challenge.

According to recent industry research summarized by Locomotive Agency, rapid AI innovation, coupled with strategic investments in automation, will likely separate search winners from also-rans in the years immediately ahead.

FAQ: Navigating SEO After the Google num=100 Update

The Google num=100 update refers to Google’s decision in September 2025 to disable the ability for search queries to display or retrieve up to 100 results per results page via the “num=100” parameter. The maximum per page is now 10, substantially restricting how SEO tools and professionals track and collect SERP ranking data.

If your reports or Google Search Console dashboards suddenly show fewer ranked keywords or impressions, you’re not alone. This is due to Google limiting standard result pages to just the top 10 per search; only these positions are now consistently trackable. This change does not necessarily indicate a drop in real ranking performance; it’s a reporting limitation driven by Google’s updated policy.

Not at all. Most likely, your actual rankings at the top of the SERPs have not changed. What’s changed is your ability (and that of your tracking tools) to see and report on keywords outside the top 10. Focus on your visibility and performance in positions 1–10 for each target keyword. For context, nearly all organic clicks still come from these first-page positions.

Yes, as of late 2025, both Bing and Yahoo continue to allow retrieval and reporting of up to 100 results per search query. Agencies and professionals can use these platforms to gain deeper insight into extended visibility, long-tail keyword opportunities, and competitive movement underneath the Google top 10 cutoff.

Agencies should provide proactive, plain-language explanations that clarify the num=100 update as a Google-driven reporting change, not a failure in SEO strategy or execution. Visualizing pre- and post-update data, sharing official industry references, and focusing on the importance of top-10 rankings will help set realistic expectations and preserve stakeholder confidence.

NytroSEO System Impact: What Users Need to Know

The Google num=100 update broadly impacted all SEO tools and platforms, and NytroSEO is no exception. Here’s a clear summary of what NytroSEO users and prospective customers should know about how these changes affect system features, reporting, and ongoing support.

Quick Facts for NytroSEO Users

- No Plan or Pricing Changes: As of now, your subscription, pricing, or lifetime deal with NytroSEO remains unaffected by this industry-wide change.

- First Page Tracking for Google: SERP performance tracking for Google is now limited to the top 10 results (page one) for any keyword, matching the new Google constraints.

- Extended Tracking on Bing/Yahoo: Bing and Yahoo still support up to 100 positions per query. NytroSEO continues to provide deep rank monitoring for these search engines, offering richer competitive and long-tail data wherever possible.

- Crawling & Reporting Adjustments: You may notice minor shifts in crawling frequency, reporting latency, or system run limits. These adjustments are direct responses to changes in Google’s infrastructure and are necessary for system stability and scalability.

- Market-Driven Limits: Remember, these limitations stem directly from Google’s new policies, not from any choice by NytroSEO or any other vendor. All software providers have had to adapt accordingly.

Disclaimer

All features, reporting depths, and data policies remain subject to NytroSEO’s Terms of Use and EULA. Should Google, Bing, or Yahoo make further alterations, adjustments will be made transparently and with clear communication.

Ongoing Support & Commitment

NytroSEO continues to invest in rapid adaptation and powerful AI automation to help users thrive, no matter how reporting limits evolve. Our support team is always available for questions, onboarding, and strategy consultation.

NytroSEO FAQ After the Google num=100 Update

No. All existing plans remain as before. Any required system changes will be communicated transparently and are market-driven, not vendor-imposed.

All Google tracking is now limited to the top 10 results by necessity. This affects every major SEO platform, not just NytroSEO.

Yes. As long as Bing and Yahoo don’t apply similar restrictions, extended ranking data remains available and integrated.

Should Bing or Yahoo adopt their own ranking restrictions, NytroSEO will adapt promptly and keep you informed of all changes affecting your reports.

No. NytroSEO continues to optimize onsite technical SEO, metadata, topics, and schema at full capacity. The only changes concern what ranking data Google allows us to show, not how your site is optimized. The focus is now on maximizing top-10 visibility.

Contact NytroSEO support at any time via your dashboard or by booking a strategy call to discuss evolving best practices in this new data environment.

Citing Sources & Further Reading (References)

For a comprehensive understanding and industry validation of the Google num=100 update, its data impact, and actionable guidance, consult the following sources:

- Search Engine Land: “Google num=100 impact” (2025)

- MSN: “77% of sites lost keyword visibility”

- Locomotive Agency: What this means for your site

- TechEnvision: Google SERP monitoring trends 2025

These articles provide up-to-date case studies, technical explanations, and data showing the broad SEO impact of Google SERP changes, and offer further context for businesses and agencies navigating the transition to post-num=100 reporting and optimization best practices.