When Design Convenience Undermines Search Visibility in No Code and Low Code Sites

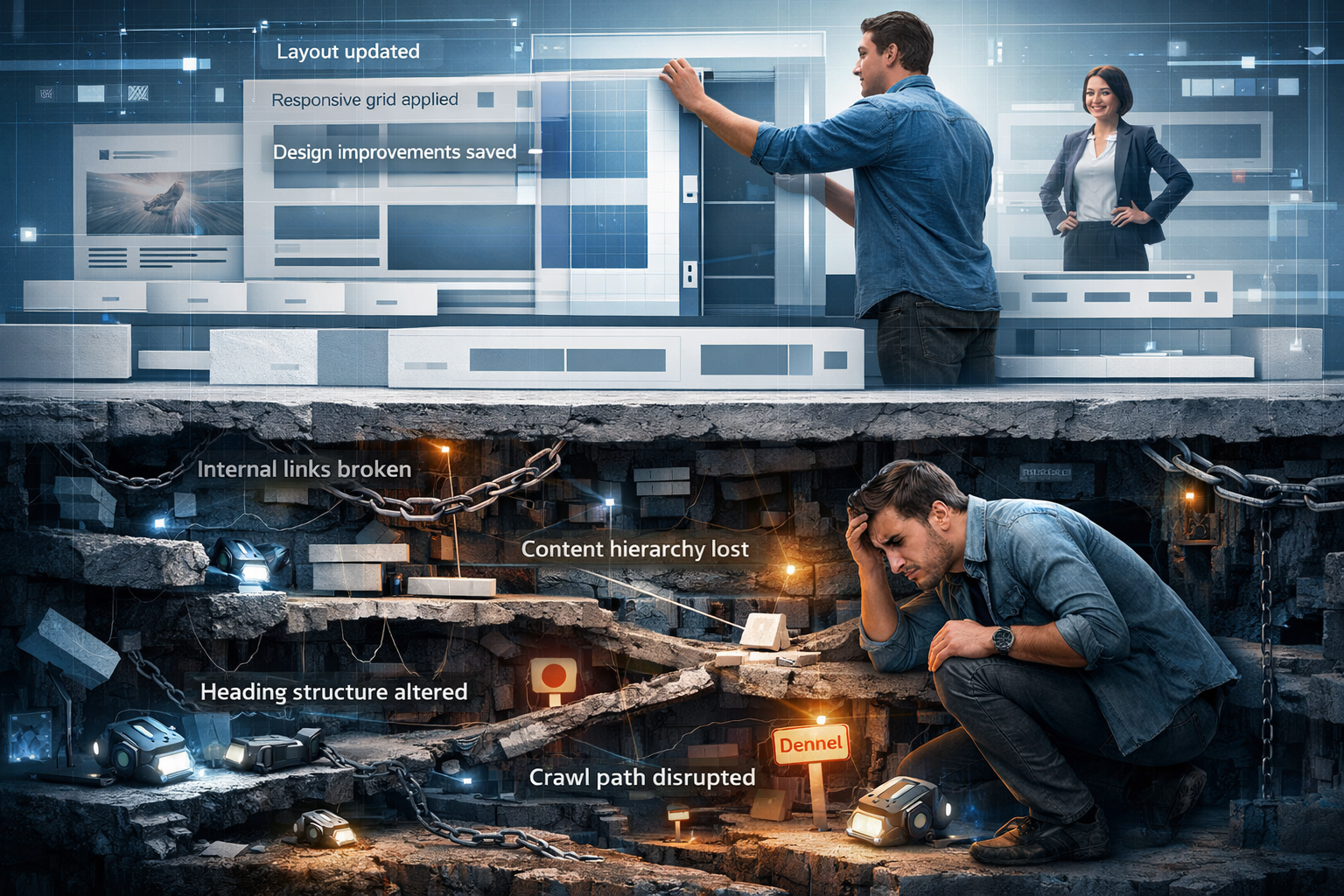

Few changes feel as reassuring as a fresh, modern website layout. With no code and low code website builders like Lovable, Base44, Durable, Mixo, and Framer AI Sites on the rise, updating a layout seems effortless: pick a new template, move a few blocks, and publish. But a persistent problem lurks beneath this surface simplicity. Each time you adjust or redesign a site on these platforms, you may be quietly undoing months of progress in search visibility without knowing it. This article examines why layout changes often sabotage a site’s performance in Google and AI search, outlines the fundamental gaps in how website builders handle SEO, and shows how to guard against invisible losses that can erase your ranking momentum overnight.

For creators, founders, agencies, and even developers, the appeal of building sites without code is clear. However, assuming that convenience equals completeness, especially where SEO is concerned, is risky. Understanding these pitfalls and adopting a rigorous approach to on-site SEO is now as critical as your design choices, and knowing where low code website SEO falls short could be the difference between thriving online and falling into digital obscurity.

The No Code Revolution: Promise of Simplicity, Reality of SEO Blind Spots

The emergence of no code and low code website builders has dramatically shifted how websites are created and managed. Platforms like Lovable, Base44, Durable, Mixo, and Framer AI Sites offer instant drag-and-drop design, eliminating the technical hurdles of traditional web development. Non-technical creators can now launch a website in hours instead of weeks, often without ever touching HTML or CSS.

This democratization of website creation now powers the online presence of countless small businesses, entrepreneurs, and service providers. Agencies also capitalize, spinning up client sites at impressive speed. Yet there’s a hidden catch: even as these platforms champion accessibility, their approach to SEO is often shallow.

Too often, these builders treat SEO as a checkbox: a field for meta descriptions, a toggle for sitemaps, an option for robots.txt. What the interface displays, however, doesn’t always translate into the live site’s source code, nor does it guarantee alignment with search engine guidelines or with what AI search systems expect. For instance:

- The sitemap listed in the dashboard may not actually exist at yourdomain.com/sitemap.xml, or may be outdated.

- Robots.txt settings may look correct in the editor but end up blocking critical pages from Google.

- Meta titles and descriptions entered in the visual panel can be missing or malformed in your actual site code.

- Structured data, if supported at all, can be incorrectly formatted or not published live.

- Canonical tags, which should prevent duplicate content problems, often contain faulty URLs or don’t update with layout changes.

- Site changes, like swapping out a theme or deleting a section, can overwrite SEO elements that were painstakingly set previously.

This gap between visual confidence and technical reality is compounded by the platforms’ promise of “automatic” SEO: if users are told a feature is enabled, why would they suspect problems? Agencies that deliver websites using these platforms similarly trust that their setup transfers fully to the live site. Unfortunately, search engines and AI chatbots aren’t swayed by what you see, only by the HTML, metadata, and structure they actually crawl.
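One practical way to close this gap is to parse the published HTML itself rather than the dashboard. The sketch below is a minimal example using only Python’s standard-library `html.parser`; the names `SEOElementParser` and `extract_seo_elements`, and the choice of fields, are illustrative assumptions, not part of any builder’s or search engine’s API.

```python
from html.parser import HTMLParser


class SEOElementParser(HTMLParser):
    """Collects the SEO-relevant tags present in raw (unrendered) HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta: dict[str, str] = {}   # lowercased meta name -> content
        self.canonical = None            # href of <link rel="canonical">
        self.json_ld_blocks = 0          # count of JSON-LD script tags
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = {k.lower(): (v or "") for k, v in attrs}
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and "name" in a:
            self.meta[a["name"].lower()] = a.get("content", "")
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")
        elif tag == "script" and a.get("type", "").lower() == "application/ld+json":
            self.json_ld_blocks += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_seo_elements(html: str) -> dict:
    """Return the title, key meta tags, canonical URL and JSON-LD count."""
    parser = SEOElementParser()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "description": parser.meta.get("description"),
        "robots": parser.meta.get("robots"),
        "viewport": parser.meta.get("viewport"),
        "canonical": parser.canonical,
        "json_ld_blocks": parser.json_ld_blocks,
    }
```

To check a live page, you could feed it `urllib.request.urlopen("https://example.com/").read().decode("utf-8", "replace")`. Note that this reads the server’s HTML before any JavaScript runs; if a builder injects tags client-side, Google may still see them after rendering, but crawlers that don’t execute JavaScript may not.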

How AI Prompts and Automated Builders Break Real SEO Behind the Scenes

No code website builders increasingly market themselves as AI-driven, promising not only hands-off creation but also “AI-powered SEO.” Users who expect the same rigor as traditional, hands-on SEO quickly run into a new set of challenges:

Prompt Confusion: Many platforms let users “prompt” AI to improve SEO, for example “optimize my meta tags” or “generate a sitemap.” These requests sound advanced, but under the hood the builder’s automation usually modifies surface elements rather than the underlying logic, and multiple uncoordinated prompts can trigger accidental regressions.

Common Issues Include:

- Updating a sitemap in the builder accidentally removes or cripples menu navigation.

- Adding metadata via prompts can overwrite crucial elements in the page’s <head> section that CSS or analytics depend on.

- Changing robots.txt to open up search access may instead block essential file types or entire directories.

- Editing main website content resets previous SEO settings or replaces them with generic alternatives.

- Rolling out new AI builder features overwrites or disables earlier functionality that was critical for crawlability.

The root problem is that AI prompts and low code website SEO interfaces rarely understand the intricacies of technical SEO. They lack persistent context, often treating each request as isolated. The platform’s logic is designed for speed (get more content live, boost visual appeal), yet it inadvertently introduces instability and erases hard-won SEO ground.

Agencies building client sites on these platforms face a particular challenge: they may hand off a site with proper settings at launch, only to see those SEO elements erased after a minor client update, plugin install, or automated platform change.

The Reality of Search and AI: What Actually Matters Beyond Surface-Level SEO

Search engines and AI chat applications judge websites by precise, behind-the-scenes metrics:

- Metadata: Unique titles and meta descriptions must be present in every page’s HTML. (Google ignores the legacy meta keywords tag.)

- Indexing Signals: A valid sitemap.xml and correctly configured robots.txt are essential. Google’s crawlers, as well as AI bots, rely on these files to discover and understand content.

- Structured Data: Schema.org markup for articles, FAQs, local business info, and more helps search engines interpret content, powers many rich results, and can help AI search experiences such as Google’s AI Overviews (formerly SGE) understand a page.

- Canonicalization: Proper canonical tags prevent duplicate content issues and consolidate ranking signals.

- Conversational Cues: AI-driven search and chatbots favor clear question-and-answer content, well-defined entities, and descriptive metadata.

- Continuous Consistency: Site-wide coherence is vital. Every update, whether to layout, content, or navigation, must retain or enhance these SEO foundations rather than break them.

- AI Discovery: AI crawlers such as OpenAI’s GPTBot honor robots.txt rules, and proposed files like llms.txt offer LLMs a curated guide to a site. An improper robots.txt update can cut AI crawlers off entirely.

Platforms designed for speed and simplicity often lack the robust systems needed to manage these elements through many iterations. The more you change or prompt, the more fragile your SEO may become. Layout tweaks, new sections, or template swaps frequently disrupt the link between what you configure in the builder and what search engines consume.

Recent data bears this out. According to a 2026 Search Engine Journal survey, modern site builders lag in technical SEO capabilities, with over 60% of users unaware that “SEO features” they configured didn’t actually deploy to their live website. Another 2026 Ahrefs technical audit found that no code and AI-builder platforms produce almost twice as many indexability errors as traditional CMS deployments.

Critical Gaps in No Code and Low Code Website SEO You Can’t Afford to Ignore

Despite ongoing product improvements, the biggest names in no code website builders are still missing key pieces of the SEO puzzle that matter to Google, Bing, and new generative AI search platforms:

1. Invisible or Outdated Sitemaps

Many builder SEO dashboards display a sitemap toggle or status, but the real file may be missing or may not update with content changes. When you redeploy a site or update layouts, new pages might never get indexed or, worse, deleted pages might remain listed for months.
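A quick way to catch this is to compare the live sitemap against the pages you know are published. This is a minimal sketch with the standard library’s `xml.etree.ElementTree`; the helper names are hypothetical, and it assumes a single `<urlset>` file (a sitemap index would need its child sitemaps fetched and merged first).

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text: str) -> set[str]:
    """Return every <loc> value listed in a sitemap <urlset>."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text}


def compare_sitemap(xml_text: str, published: list[str]) -> dict[str, list[str]]:
    """Report published pages missing from the sitemap, and listed pages
    that are no longer published (stale entries)."""
    listed = sitemap_urls(xml_text)
    live = set(published)
    return {
        "missing": sorted(live - listed),
        "stale": sorted(listed - live),
    }
```

The `published` list is an assumption: it might come from your own page inventory or a crawler export.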

2. Robots.txt Mismatches

The GUI may show “Allow search engines,” but the real robots.txt in the root directory might block key folders, images, or resources. Template or plugin swaps can even overwrite the file, causing blanket indexation issues site-wide.
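To test the live file rather than the GUI toggle, Python’s standard `urllib.robotparser` can evaluate robots.txt rules for specific URLs and user agents. The sketch below (the `blocked_urls` helper is our own, hypothetical name) is an approximation: the stdlib parser uses simple prefix matching with first-match precedence, and does not implement Google’s `*` and `$` path wildcards or its longest-match rule, so treat a clean result as a first pass rather than proof.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text already fetched from /robots.txt
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


robots = "User-agent: *\nDisallow: /assets/\n"
critical = [
    "https://example.com/",
    "https://example.com/assets/app.css",  # a CSS file Google needs to render pages
    "https://example.com/blog/post",
]
print(blocked_urls(robots, critical))
```

Running the same check with `user_agent="GPTBot"` shows whether a rule change has shut out an AI crawler while leaving Googlebot untouched.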

3. Lost Metadata and Structured Data

Platforms let users type custom meta tags or add JSON-LD schema in a settings panel. However, pushing a layout update, duplicating a page, or prompting “refresh” can strip these elements, sometimes only on certain device views or template versions. Google then sees blank or default tags, diluting your rankings.

4. Faulty Canonical and Open Graph Tags

No code and low code platforms often auto-generate canonical URLs that break when layouts change, pointing to non-canonical, test, or error URLs, or they duplicate Open Graph data across pages, confusing search and social crawlers.

5. Non-Persistent SEO Logic

Most platforms offer no audit trail or version control for SEO settings. The next update, plugin addition, or staff member edit can silently revert or delete your optimization work, and the builder offers no warning.

6. AI and Chat Search Misalignment

AI and LLM-driven search systems increasingly favor pages with question-answer content, clear topical entities, and descriptive metadata. Current drag-and-drop and prompt interfaces offer little support for these needs. Merely typing “add FAQ schema” into a builder won’t suffice; the markup has to be valid JSON-LD that matches the content visible on the page.
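For reference, a Schema.org `FAQPage` block can be generated from question-answer pairs as sketched below. The `faq_jsonld` function is a hypothetical helper; the `FAQPage`, `Question`, and `Answer` types come from Schema.org. Keep in mind that since 2023 Google shows FAQ rich results only for a narrow set of authoritative sites, so treat this markup as machine-readable structure rather than a guaranteed search feature.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a <script> tag containing Schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so answer text can never close the <script> element early;
    # "\/" is a valid JSON escape for "/".
    body = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

The questions and answers passed in should mirror the FAQ text readers actually see on the page, and the output can be checked with a validator such as Google’s Rich Results Test.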

7. Broken Internal Linking and Navigation

Site builders update navigation automatically but rarely optimize internal links for topical authority or crawl depth. Likewise, new navigation is typically added without regard to SEO fundamentals, sometimes leaving pages orphaned and unindexed.
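Orphaned pages and excessive click depth can be found with a breadth-first search over your internal link graph. This sketch assumes you already have that graph as a mapping from each page to the pages it links to (for example, exported from a crawler); the function names are illustrative.

```python
from collections import deque


def crawl_depths(links: dict[str, set[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage: page -> minimum clicks to reach it."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable(pages: list[str], links: dict[str, set[str]], home: str) -> list[str]:
    """Pages (e.g. from the sitemap) that no internal link path reaches."""
    reached = crawl_depths(links, home)
    return sorted(p for p in pages if p not in reached)
```

Pages that come back as unreachable, or that sit many clicks deep, are good candidates for the manual internal links recommended later in this article.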

8. Regressions After Prompts

A prompted change to “update all page titles” might apply a generic set of titles sitewide. Replacing a homepage headline can erase embedded schema or break canonical references across the site.
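Generic, sitewide titles are easy to detect once you have each page’s live title (for instance, from an HTML extraction step run on every URL). The helper below is a hypothetical sketch that groups pages by normalized title and reports blanks and duplicates.

```python
from collections import defaultdict


def title_problems(titles: dict[str, str]) -> dict:
    """Given url -> live <title>, report empty titles and titles shared by several URLs."""
    groups: dict[str, list[str]] = defaultdict(list)
    missing = []
    for url, title in titles.items():
        # Collapse whitespace and ignore case so near-identical titles group together.
        normalized = " ".join((title or "").split()).lower()
        if not normalized:
            missing.append(url)
        else:
            groups[normalized].append(url)
    duplicates = {t: sorted(urls) for t, urls in groups.items() if len(urls) > 1}
    return {"missing": sorted(missing), "duplicates": duplicates}
```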

9. Accessibility and Mobile-Aware SEO

Despite polished visual previews, the underlying code may lack an appropriate mobile viewport meta tag or ARIA labels, weakening the signals that search engines and voice search platforms rely on.
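A few of these gaps can be spotted directly in the HTML. The sketch below, again with the standard `html.parser`, checks for a viewport meta tag, a `lang` attribute on `<html>`, and images with no `alt` attribute at all (an empty `alt=""` is valid for decorative images, so it is not counted). The checks chosen and the helper names are our own assumptions; a full accessibility audit needs dedicated tooling.

```python
from html.parser import HTMLParser


class _A11yParser(HTMLParser):
    """Records a few mobile and accessibility markers from raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.html_lang = None
        self.has_viewport = False
        self.images_missing_alt = 0

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "html":
            self.html_lang = a.get("lang")
        elif tag == "meta" and (a.get("name") or "").lower() == "viewport":
            self.has_viewport = True
        elif tag == "img" and "alt" not in a:
            self.images_missing_alt += 1


def accessibility_gaps(html: str) -> dict:
    parser = _A11yParser()
    parser.feed(html)
    parser.close()
    return {
        "html_lang": parser.html_lang,
        "has_viewport": parser.has_viewport,
        "images_missing_alt": parser.images_missing_alt,
    }
```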

10. Missing AI Discovery Files

Emerging AI-oriented files, such as the proposed llms.txt, are rarely supported or correctly maintained in no code environments.
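llms.txt is a community proposal (documented at llmstxt.org), not a requirement from Google or OpenAI, and support among AI systems varies. As we understand the proposal, the file is Markdown: an H1 with the site name, a blockquote summary, then H2 sections listing links. The generator below is a hypothetical sketch of that layout; check the current proposal before publishing, and host the result at yourdomain.com/llms.txt only if your builder lets you serve root files.

```python
def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt-style Markdown file.

    sections maps an H2 heading to (link title, URL, optional note) tuples.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}" if note else f"- [{title}]({url})")
        lines.append("")
    return "\n".join(lines)
```

Regenerating this file from the same page inventory used for the sitemap keeps it from drifting after layout changes.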

When websites built for speed and creation simplicity ignore these requirements, the damage is cumulative, often invisible until rankings and traffic begin to fall.

Protecting Your Rankings: Strategies for Surviving Layout Updates Without SEO Loss

It’s possible to enjoy the creative benefits of modern website builders while still safeguarding your rankings, provided you address these technical gaps with vigilance and the right resources. Here’s how:

Perform Post-Update Technical Audits

After every major layout change or builder update, use third-party tools like Screaming Frog, Ahrefs Site Audit, or Google Search Console to crawl your live site. Compare sitemaps, robots.txt, meta tags, structured data, and canonical URLs with your intended configurations. If you can’t see it in the HTML “View Source,” it probably doesn’t exist for crawlers either.

Keep a Settings Version Log

Document your SEO settings before every layout, template, or plugin change. Track what’s applied and compare to what’s live after updates. Manual logs help spot silent reversions.
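A settings log becomes far more useful when it can be diffed automatically. This sketch assumes each snapshot is a mapping of URL to SEO fields (title, description, canonical, and so on) captured from the live site; the function names and JSON file layout are illustrative choices, not a standard format.

```python
import json
from pathlib import Path


def save_snapshot(snapshot: dict, path: str) -> None:
    """Persist a snapshot (url -> {field: value}) as readable JSON."""
    Path(path).write_text(json.dumps(snapshot, indent=2, sort_keys=True), encoding="utf-8")


def load_snapshot(path: str) -> dict:
    return json.loads(Path(path).read_text(encoding="utf-8"))


def diff_snapshots(before: dict, after: dict) -> list[tuple]:
    """List (url, field, old, new) for every field that changed, appeared, or vanished."""
    changes = []
    for url in sorted(before.keys() | after.keys()):
        old, new = before.get(url, {}), after.get(url, {})
        for field in sorted(old.keys() | new.keys()):
            if old.get(field) != new.get(field):
                changes.append((url, field, old.get(field), new.get(field)))
    return changes
```

Saving one snapshot before a template swap and another after it turns silent reversions into an explicit list of changed fields.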

Use External Sitemap Generators and Host Separately if Needed

If your builder cannot guarantee an up-to-date sitemap, create one with an external tool, host it at yourdomain.com/sitemap.xml (if your builder allows custom root files), and submit it through Google Search Console.

Validate Robots.txt and Canonical Tags Frequently

Check the live robots.txt at yourdomain.com/robots.txt, ensuring it allows correct page and resource crawling. Confirm canonical tags are pointing to the preferred, crawlable URL, especially after design overhauls.

Prioritize Structured Data and FAQs for AI Search

Modern search demands precise schema for articles, questions, and reviews. Use external validators like Google’s Rich Results Test and re-add or repair missing markup after changes.

Create Consistent Internal Links Manually

Builders automate menus, but you should also manually link to new and important content across relevant pages. This boosts topical authority and discoverability for underlinked pages.

Don’t Trust Builder “SEO Scores”

Ignore vanity “SEO health” metrics in dashboards. Only real-time indexing, crawlability, and sustained rankings count.

Consider Automated SEO Platforms for Scaling

If you or your agency consistently run into builder SEO limitations, integrating an external automated SEO system (like NytroSEO or similar) can bridge the gap, providing real-time tracking, self-healing meta tags, and up-to-date schema regardless of the builder’s quirks.

Communicate Builder Risks to Stakeholders

If you deliver sites for clients, educate them on the difference between interface settings and search-ready code. Set recurring checkups post-launch.

Regularly Monitor with Live SERP Data

Track your pages in Google with manual site:yourdomain.com searches and with tools that alert you to indexing drops. Failing to catch disappearing pages early can derail campaigns.

The simplest way to summarize: Never trust what you see in the builder. Trust only what live crawlers, validators, and search engines confirm.

Future-Proofing Low Code and No Code Website SEO: A Look Ahead

Search technology doesn’t stay still, and neither do the expectations for high-performing sites. As AI becomes ever more integrated with browsing and search discovery, the importance of accurate, persistent SEO intensifies.

Recent moves by Google, Microsoft, and OpenAI, such as giving site owners robots.txt and meta-tag controls over AI crawling (for example, Google-Extended and GPTBot), alongside emerging proposals like llms.txt and a growing emphasis on conversational content, are only the beginning. Sites built for speed with limited technical SEO will find themselves invisible in future AI-driven experiences. Already, data from Moz in 2026 confirms that sites with correct schema and metadata outperform those without by wide margins in AI-overview appearances.

Builder platforms are starting to evolve, but changes are slow. In the meantime, using advanced site monitoring, employing third-party SEO website optimization platforms, and dedicating resources to validate every aspect of on-site SEO optimization are smart moves.

Additionally, consider integrating a website optimiser platform that sits on top of the builder output. The most advanced tools offer real-time, code-level SEO improvements without manual work, fixing missing metadata, structured data, FAQ markup, and even internal linking with each site update. This lets founders, agencies, and creators move fast while keeping pace with the ever-more demanding standards of both search engines and AI discovery systems.

Ultimately, the convenience of launching with no code or low code doesn’t need to mean accepting mediocrity or invisibility in search. It requires vigilance, smart tooling, and a willingness to look past the visual dashboard to what truly matters: what search algorithms actually see, and what they need for your site to win.

FAQ: Clarifying Key Questions About Layout Changes and Low Code Website SEO

When you update your layout using a no code or low code website builder, you may unintentionally erase or distort SEO elements, like metadata, structured data, sitemaps, or canonical tags. These elements aren’t always preserved through builder updates, even though you might see them in the builder’s settings panel. If the live site’s code no longer matches what search engines need, your rankings can drop, sometimes without obvious signs until it’s too late.

Many popular builders provide SEO input fields or checkboxes, but those may not translate into actual HTML for search engines. Common issues include missing or nonfunctional sitemaps, incorrect robots.txt, absent or incorrect structured data, faulty canonical tags, and meta tags that don’t publish live. These gaps mean your site isn’t giving search engines what they require to rank your pages properly.

Regularly audit your live site with external SEO tools and validators after every significant update. Manually check the live HTML for correct meta tags, sitemap presence, robots.txt, canonical URLs, and structured data. Don’t rely exclusively on what the builder dashboard displays; instead, confirm using real-time crawls, search console data, or dedicated SEO optimisation platforms.

Advanced SEO platforms can work alongside no code and low code builders. These tools can automatically inject and update correct meta tags, structured data, canonical links, and more, helping protect and enhance your site’s search visibility even as you deploy new layouts or content changes. They effectively bridge the gap between drag-and-drop convenience and technical SEO requirements.

Failing to maintain technical SEO will lead to a gradual decline in search visibility, indexing, and ultimately, your site’s traffic. As AI-driven search and stricter ranking criteria emerge, sites built solely for convenience without ongoing technical SEO validation will find themselves missing from AI overviews, chat results, and high-value Google rankings. Proactive monitoring and automated optimisation are now essentials, not optional extras.